Proxies for Web Scraping: Enhance Your Data Collection

In web data extraction, it is often hard to keep up with the latest anti-scraping measures. That is where proxies for web scraping come in handy. This guide explains how proxy servers help you collect the web data you need, how the main proxy types differ, and how to choose the right one for your projects.

Understanding Proxies for Web Scraping

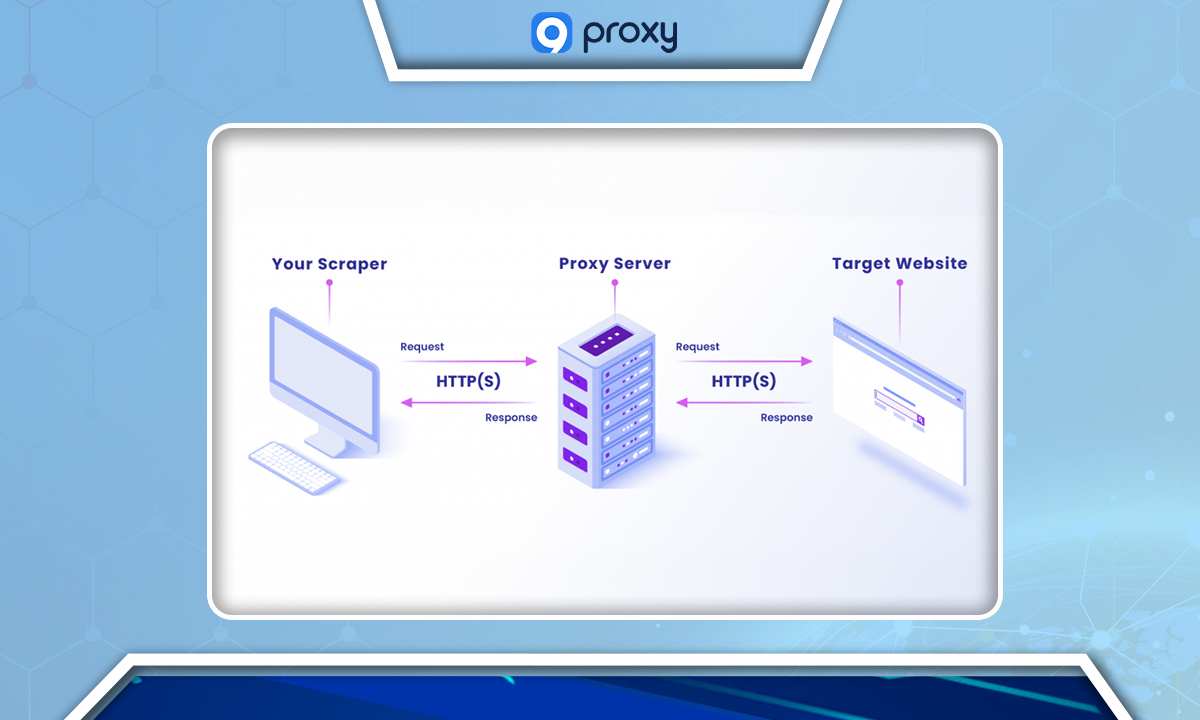

A proxy server is a tool that functions as an intermediary between your computer and the internet. When you're web scraping—which means automatically gathering data from websites—this server requests data on your behalf, hiding your computer's identity from the websites. This is useful for privacy and can help avoid being blocked by the website's anti-scraping measures.

There are two main types of proxy protocols: HTTP and SOCKS. HTTP proxies are more common and integrate seamlessly with web browsing tools, making them a go-to choice for many web scraping activities. SOCKS proxies work at a lower level and relay arbitrary TCP traffic without interpreting it (SOCKS5 also supports UDP and authentication), which makes them more flexible for non-HTTP or more complex scraping tasks. In practice, speed, reliability, and security depend more on the provider and the network path than on the protocol itself.
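
As a minimal sketch of how the two protocols show up in code, the snippet below builds proxy URLs for an HTTP and a SOCKS5 proxy and wires the HTTP one into Python's standard library. The helper names (`proxy_url`, `proxy_mapping`), the addresses, and the credentials are placeholders chosen for illustration, not real endpoints.

```python
from typing import Optional
from urllib.parse import quote
import urllib.request


def proxy_url(scheme: str, host: str, port: int,
              username: Optional[str] = None,
              password: Optional[str] = None) -> str:
    """Build a proxy URL such as http://user:pass@host:port."""
    auth = ""
    if username is not None:
        # Percent-encode credentials so characters like '@' or ':' stay valid.
        auth = quote(username, safe="")
        if password is not None:
            auth += ":" + quote(password, safe="")
        auth += "@"
    return f"{scheme}://{auth}{host}:{port}"


def proxy_mapping(url: str) -> dict:
    """Route both plain HTTP and HTTPS traffic through the same proxy."""
    return {"http": url, "https": url}


# Placeholder endpoints (203.0.113.0/24 is reserved for documentation).
http_proxy = proxy_url("http", "203.0.113.10", 8080)
socks_proxy = proxy_url("socks5", "203.0.113.10", 1080, "user", "p@ss")

# The standard library can use the HTTP proxy directly. No request is sent
# until opener.open(...) is called.
opener = urllib.request.build_opener(
    urllib.request.ProxyHandler(proxy_mapping(http_proxy))
)
```

The standard library does not speak SOCKS. With the third-party `requests` library, the same mapping can be passed as `requests.get(url, proxies=proxy_mapping(socks_proxy))`, provided the optional `requests[socks]` extra is installed.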

Types of Proxies for Web Scraping

When undertaking web scraping, the type of proxy you select is crucial for gathering data efficiently and effectively. Here's an overview of different proxy types:

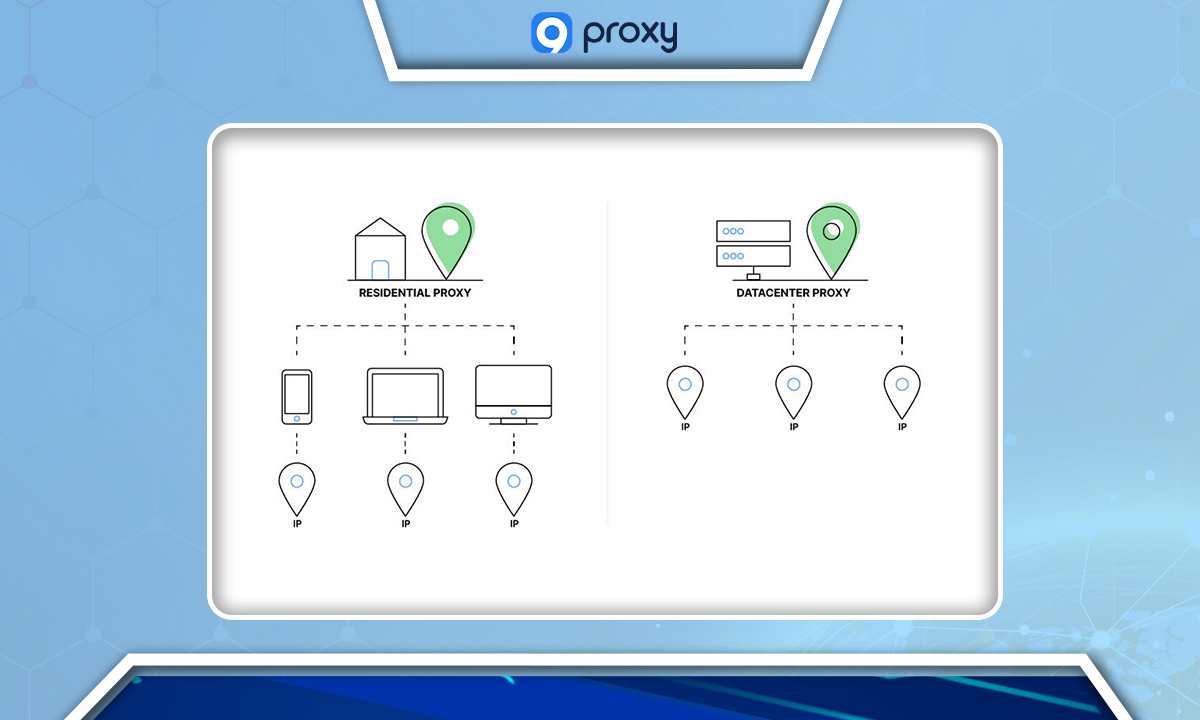

Residential Proxies

Residential proxies use IP addresses that internet service providers (ISPs) assign to home connections, so requests appear to come from a standard home internet connection. Because they blend in with regular user traffic, residential proxies are excellent for navigating sites with strict security measures, reducing the risk of detection and blocking.

Datacenter Proxies

Datacenter proxies offer IP addresses from data centers. They're not associated with ISPs and are prized for their speed and affordability. Although they are more prone to detection due to their non-residential nature, they excel in tasks where quick, bulk data collection is needed and the website security is not as rigorous.

Rotating Proxies

Rotating proxies change your IP address at regular intervals or with every new request. This frequent change makes it hard for websites to track and block the scraper, providing an effective solution for dealing with websites that have strong anti-bot systems in place.
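
Per-request rotation can be sketched in a few lines, assuming you manage the pool yourself rather than relying on a provider's rotating gateway. The pool addresses and page paths below are placeholders, and round-robin is just one simple rotation policy.

```python
from itertools import cycle
from typing import Iterator, List


def rotating_proxies(pool: List[str]) -> Iterator[str]:
    """Return an endless round-robin iterator over the proxy pool."""
    if not pool:
        raise ValueError("proxy pool is empty")
    return cycle(pool)


# Placeholder pool (documentation-only addresses).
POOL = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

rotation = rotating_proxies(POOL)

# Pair each page to fetch with the next proxy in the rotation.
pages = ["/page/1", "/page/2", "/page/3", "/page/4"]
assignments = [(page, next(rotation)) for page in pages]
```

With three proxies and four pages, the fourth page wraps around to the first proxy again. A real scraper would usually also drop proxies from the pool once they start failing.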

Dedicated Proxies

Dedicated proxies are private and are assigned to a single user. They offer a stable scraping experience and minimize the chances of being blocked, as the IP reputation remains consistent. These proxies are ideal for long-term projects that require reliability.

Shared Proxies

These are used by multiple users at the same time and are the most economical option. While they are cost-effective, the risk of being blacklisted is higher due to the shared usage. They are suitable for less intensive scraping tasks where the budget is a constraint.

Why Should You Use a Proxy for Web Scraping?

Utilizing a proxy server when web scraping can be highly beneficial for several reasons:

- Anonymity and Security: Proxies conceal your actual IP address, which can prevent the target website from identifying and blocking your scraping attempts. This layer of anonymity enhances your security, allowing you to access and collect data without revealing your identity.

- Avoiding Blocks and Bans: With a proxy, you can route your requests through various IP addresses. This diversity can help to bypass restrictions or bans that websites may impose on scrapers, particularly crucial when dealing with sophisticated sites that have mechanisms to detect and block scraping activities.

- Geographical Flexibility: Proxies can make requests appear as if they are coming from different geographical locations. This capability is particularly useful when you need to access location-specific content, such as regional pricing or products on e-commerce websites.

- Enhanced Privacy and Control: By hiding your machine's IP address, proxies provide an additional privacy shield. If you use your own in-house proxies, you also gain complete control over your scraping process, ensuring data privacy and enabling your engineers to manage the scraping activities more effectively.

- Bypassing Content Restrictions: Proxies can be instrumental in circumventing content restrictions, including those based on geography. They enable access to content that might otherwise be inaccessible due to location-based blocking implemented by the website.

How to Choose Proxies for Web Scraping

Selecting the right proxy is pivotal for the success of your data collection. Here are the factors you should consider:

- Type of Proxies: Understand the different proxy types—residential, datacenter, rotating, dedicated, and shared. Choose based on the scraping task’s requirements.

- Security and Reliability: Opt for proxy servers that offer robust security features, including encryption, to protect your data. Reliability is equally important; proxies should have minimal downtime to avoid interruptions in your scraping activities.

- Cost and Budget: Your budget will influence your choice. While free proxies are an option, they typically lack speed and reliability. Paid proxies offer better performance and support, but costs vary. Balance the price against your project's budget and the quality of service you need.

- Integration with Scraping Tools: The proxy should work well with your scraping software or scripts. Compatibility issues can lead to inefficiencies, so check for seamless integration to ensure smooth operations.

- Speed and Performance: The proxy’s speed is vital—slow proxies can lead to timeouts and data collection delays. Use benchmarking tools to test proxy speeds before committing.
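
If you would rather measure speed yourself than rely on a benchmarking tool, a rough sketch is to time a few fetches through each candidate proxy and compare the medians. The `median_latency` helper is hypothetical, and the `fetch` callable here is a stand-in that only sleeps. In practice it would perform one request through the proxy under test.

```python
import time
from statistics import median
from typing import Callable, Optional


def median_latency(fetch: Callable[[], object],
                   attempts: int = 3) -> Optional[float]:
    """Return the median seconds per successful call, or None if all fail."""
    timings = []
    for _ in range(attempts):
        start = time.perf_counter()
        try:
            fetch()
        except Exception:
            continue  # a failed attempt contributes no timing sample
        timings.append(time.perf_counter() - start)
    return median(timings) if timings else None


def fake_fetch() -> None:
    """Stand-in for a real request through a proxy (assumption)."""
    time.sleep(0.01)


def broken_fetch() -> None:
    """Stand-in for a proxy that always times out (assumption)."""
    raise TimeoutError("proxy did not respond")


fast = median_latency(fake_fetch)
dead = median_latency(broken_fetch)
```

The median is used instead of the mean so that a single slow outlier does not dominate the result. A proxy that returns `None` failed every attempt and can be dropped from consideration.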

Tools for Web Scraping with a Proxy Server

When web scraping with a proxy server, there are several tools available that can help with evaluating proxies and executing web scraping tasks effectively:

Selenium

Selenium is a suite of tools for automating web browsers. It allows you to mimic human-like interactions with websites, which can be particularly useful for complex scraping tasks. Selenium can be configured to work with proxies, enabling you to scrape with anonymity.
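
One common way to point Selenium at a proxy is Chrome's `--proxy-server` command-line flag. The sketch below assumes Selenium 4 with Chrome and a local driver available. The helper names are hypothetical, and the flag as used here carries no credentials, so authenticated proxies typically need extra handling.

```python
def chrome_proxy_argument(host: str, port: int, scheme: str = "http") -> str:
    """Build the Chrome command-line flag that routes traffic via a proxy."""
    return f"--proxy-server={scheme}://{host}:{port}"


def make_proxied_chrome(host: str, port: int):
    """Start a Chrome session whose traffic goes through the given proxy."""
    # Imported lazily so the helper above works without Selenium installed.
    from selenium import webdriver

    options = webdriver.ChromeOptions()
    options.add_argument(chrome_proxy_argument(host, port))
    return webdriver.Chrome(options=options)


# Placeholder address (documentation-only range).
flag = chrome_proxy_argument("203.0.113.10", 8080)
```

Calling `make_proxied_chrome("203.0.113.10", 8080)` would launch the browser. Remember to call `quit()` on the returned driver when the scraping session is done.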

Scrapy

Scrapy is an open-source Python framework designed for web crawling and scraping. It allows for proxy management and can handle various types of requests and anti-scraping mechanisms, making it a robust choice for serious scraping needs.
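
In Scrapy, a request is sent through a proxy when `request.meta["proxy"]` is set, which the built-in HttpProxyMiddleware then honors. A minimal sketch of a custom downloader middleware that assigns proxies round-robin is shown below. The class name and the `PROXY_LIST` setting are assumptions made for this example, not part of Scrapy.

```python
from itertools import cycle
from typing import Iterable


class RotatingProxyMiddleware:
    """Downloader-middleware sketch: attach a proxy to each outgoing request."""

    def __init__(self, proxies: Iterable[str]):
        proxies = list(proxies)
        if not proxies:
            raise ValueError("no proxies configured")
        self._proxies = cycle(proxies)

    @classmethod
    def from_crawler(cls, crawler):
        # PROXY_LIST is a hypothetical custom setting, not a Scrapy built-in.
        return cls(crawler.settings.getlist("PROXY_LIST"))

    def process_request(self, request, spider):
        # Respect a proxy that the spider already chose for this request.
        if "proxy" not in request.meta:
            request.meta["proxy"] = next(self._proxies)
        return None  # let Scrapy continue processing the request
```

To try it, the class would be registered under `DOWNLOADER_MIDDLEWARES` in the project settings, with an order value that places it before the built-in proxy middleware, and `PROXY_LIST` would hold your proxy URLs.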

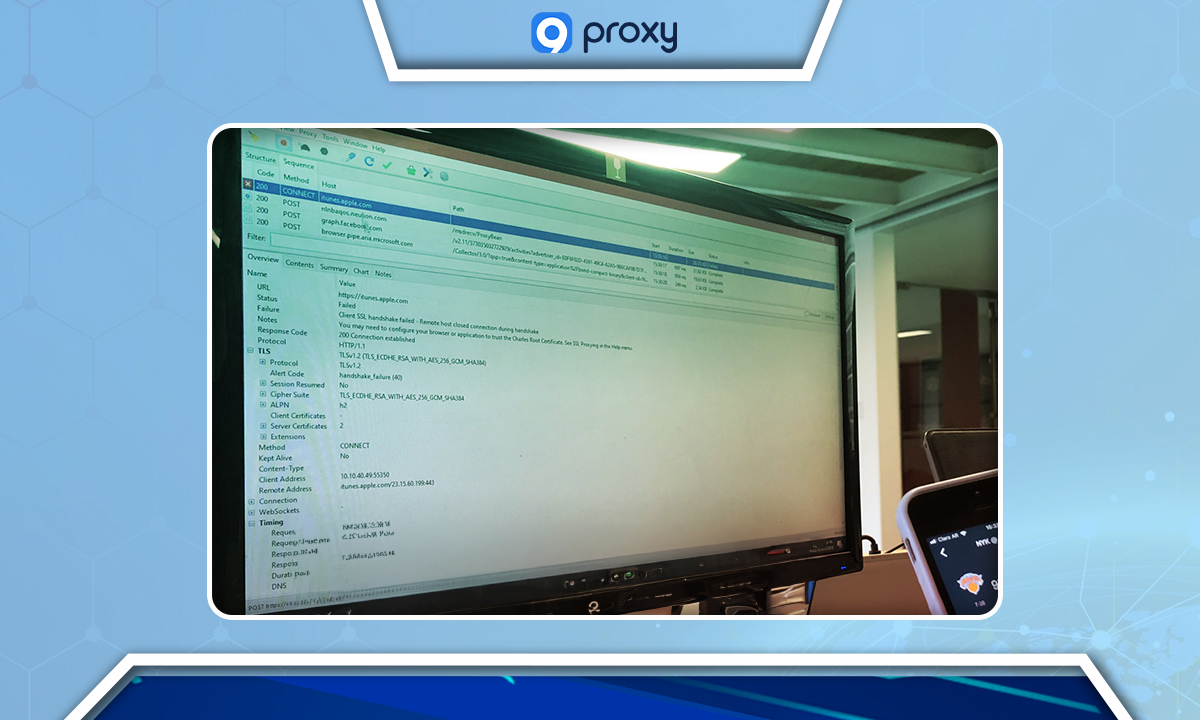

Charles Proxy

Charles is an HTTP proxy, HTTP monitor, and reverse proxy that lets a developer view all of the HTTP and SSL/HTTPS traffic between their machine and the Internet. This includes requests, responses, and the HTTP headers (which contain the cookies and caching information). It is particularly useful for testing and debugging web scraping tools.

Proxy Checker

Proxy Checker is a standalone tool that can be used to validate the functionality of proxy servers. It can test multiple proxies simultaneously and provide details on their speed and anonymity level, helping you to select the best proxies for your scraping project.
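
The core idea of testing many proxies at once can be sketched with a thread pool. This is an illustration of the concept, not how any particular checker tool works internally. The `probe` callable is an assumption: in practice it would send one test request through the proxy and return whether it succeeded, while here a stand-in is used.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def check_proxies(proxies: List[str],
                  probe: Callable[[str], bool],
                  max_workers: int = 8) -> Dict[str, bool]:
    """Probe every proxy concurrently and report which ones work."""

    def safe_probe(proxy: str) -> bool:
        try:
            return bool(probe(proxy))
        except Exception:
            return False  # any error counts as a dead proxy

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with proxies.
        results = list(pool.map(safe_probe, proxies))
    return dict(zip(proxies, results))


def fake_probe(proxy: str) -> bool:
    """Stand-in probe: pretend only proxies on port 8080 respond."""
    if proxy.endswith(":9999"):
        raise ConnectionError("refused")
    return proxy.endswith(":8080")


report = check_proxies(
    ["http://203.0.113.10:8080", "http://203.0.113.11:3128",
     "http://203.0.113.12:9999"],
    fake_probe,
)
working = [proxy for proxy, ok in report.items() if ok]
```

Threads suit this job because each probe spends most of its time waiting on the network. This sketch reports only whether a proxy works, not its speed or anonymity level.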

FAQ

How many proxy servers do I need for web scraping?

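The number depends on your scraping volume and the target website's restrictions. As a rough rule of thumb, divide your hourly request rate by 500 to estimate the needed proxy count, which assumes each proxy can safely send about 500 requests per hour to the target. For example, 10,000 requests per hour would call for about 20 proxies. Stricter sites may tolerate fewer requests per IP and therefore require more proxies. For large-scale scraping, several proxies are often necessary to mimic natural browsing and avoid detection.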
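
The rule of thumb is easy to turn into a small calculation. In the sketch below, the function name is hypothetical and the 500 requests per proxy per hour default comes straight from the guideline above. Treat it as a starting estimate to adjust per site.

```python
def estimate_proxy_count(requests_per_hour: int,
                         per_proxy_hourly_limit: int = 500) -> int:
    """Estimate proxies needed, rounding up and never returning less than 1."""
    if requests_per_hour < 0:
        raise ValueError("requests_per_hour must not be negative")
    if per_proxy_hourly_limit <= 0:
        raise ValueError("per_proxy_hourly_limit must be positive")
    # Integer ceiling division: -(-a // b) rounds up without float error.
    needed = -(-requests_per_hour // per_proxy_hourly_limit)
    return max(1, needed)


small_job = estimate_proxy_count(100)      # well under one proxy's budget
medium_job = estimate_proxy_count(750)     # rounds up to a second proxy
large_job = estimate_proxy_count(10_000)   # 10,000 / 500
```

Rounding up matters here: 750 requests per hour divided by 500 is 1.5, and a single proxy would exceed its budget, so the estimate is 2.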

How do proxy servers affect the speed of web scraping?

Proxy servers can impact scraping speed. High-quality paid proxies often offer better speeds and reliability, whereas free or low-quality proxies can slow down the scraping process due to poor performance or overcrowded servers.

Is it possible to use VPNs instead of proxies for web scraping?

VPNs can be used for web scraping to change your IP address, but they are generally less flexible than proxies when it comes to IP rotation and managing multiple IP addresses. Proxies offer more granular control which is often necessary for efficient and large-scale web scraping.

Conclusion

In the detailed task of data collection, proxies for web scraping stand as an essential ally, helping you get past the web's many anti-scraping defenses. With the insights in this guide, you are now prepared to select and use the right proxy, making your web scraping both effective and discreet. As you convert data into valuable insights, remember that this is just the start. Explore more about proxies and improve your scraping methods by reading expert content from 9Proxy.
